StyleCrafter: Enhancing Stylized Text-to-Video Generation with Style Adapter

Supplementary Material

- Comparison to Baselines (Single Reference)

- Comparison to Baselines (Multiple Reference)

- Ablation Study

- StyleCrafter Combined with Depth Control

- Additional Results of StyleCrafter

- References

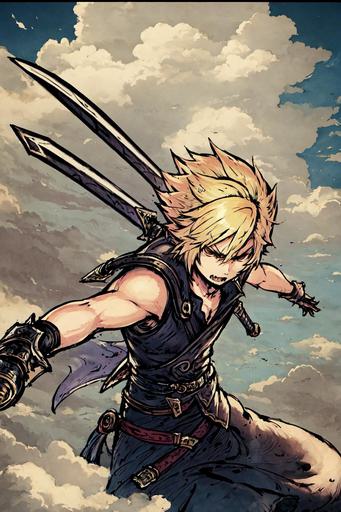

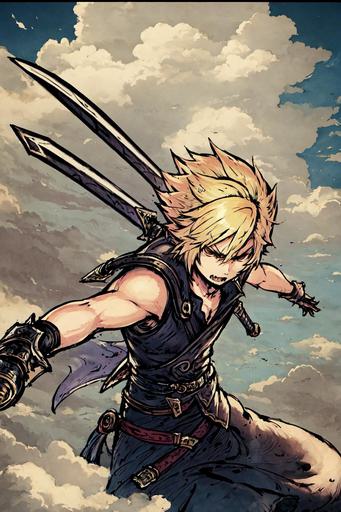

Comparison to Baselines (Single Reference)

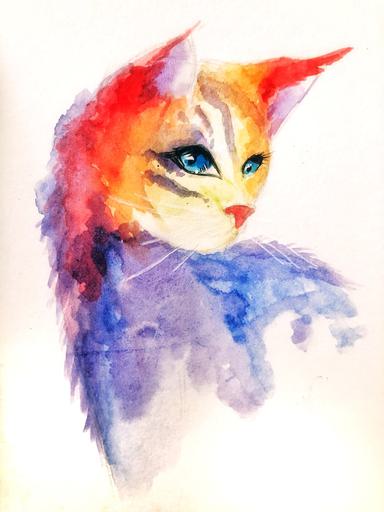

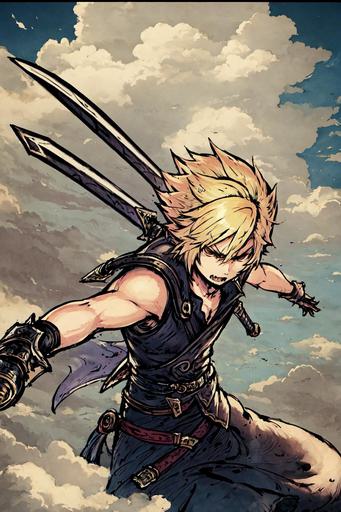

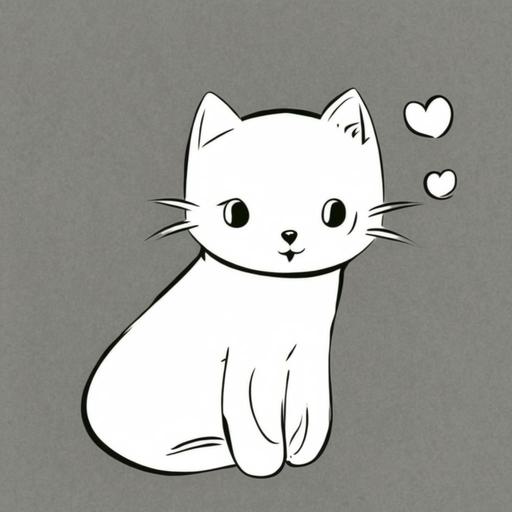

We compare our method with other single-reference style-guided text-to-video methods:

- VideoComposer [1]

- VideoCrafter* (VideoCrafter [2] equipped with GPT-4V [3])

- Gen2* (Gen2 [4] equipped with GPT-4V [3])

| Prompt | VideoComposer [1] | VideoCrafter* [2] | Gen2* [4] | Ours |
|---|---|---|---|---|
| "A chef preparing meals in kitchen." | | | | |
| "A wolf walking stealthily through the forest." | | | | |
| "A field of sunflowers on a sunny day." | | | | |
| "A rocketship heading towards the moon." | | | | |
| "A bear catching fish in a river." | | | | |
| "A knight riding a horse through a field." | | | | |
| "A river flowing gently under a bridge." | | | | |
| "A street performer playing the guitar." | | | | |

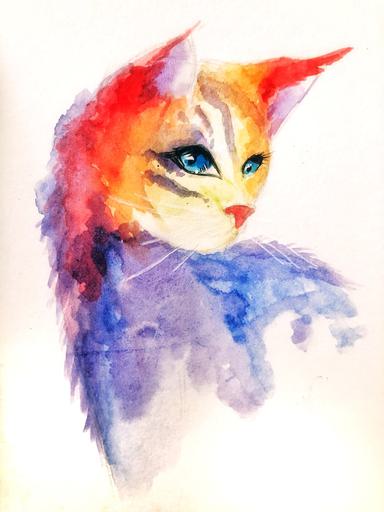

Comparison to Baselines (Multiple Reference)

We compare our method with AnimateDiff [5] on multi-reference style-guided text-to-video generation. Ours (S-R) and Ours (M-R) denote our method conditioned on a single style reference and on multiple style references, respectively.

Our method effectively generates high-quality stylized videos that align with the prompts and conform to the style of the reference images, without any additional fine-tuning cost.

| Prompt | AnimateDiff [5] | Ours (S-R) | Ours (M-R) |
|---|---|---|---|
| "A wooden sailboat docked in a harbor." | | | |
| "A student walking to school with backpack." | | | |
| "A street performer playing the guitar." | | | |
| "A wolf walking stealthily through the forest." | | | |
| "A student walking to school with backpack." | | | |
| "A knight riding a horse through a field." | | | |
| "A chef preparing meals in kitchen." | | | |
| "A rocketship heading towards the moon." | | | |

Ablation Study

We ablate the two-stage training strategy to verify its effectiveness for stylized video generation.

| Prompt | Only Stage 1 | Only Joint Training | Ours |
|---|---|---|---|
| "A student walking to school with backpack." | | | |

StyleCrafter Combined with Depth Control

We present sample results of our method combined with depth control and compare them with VideoComposer [1].

| Prompt | Input Depth | VideoComposer [1] | Ours |
|---|---|---|---|
| "A tiger walks in the forest." | | | |
| "A car turning around on a countryside road." | | | |

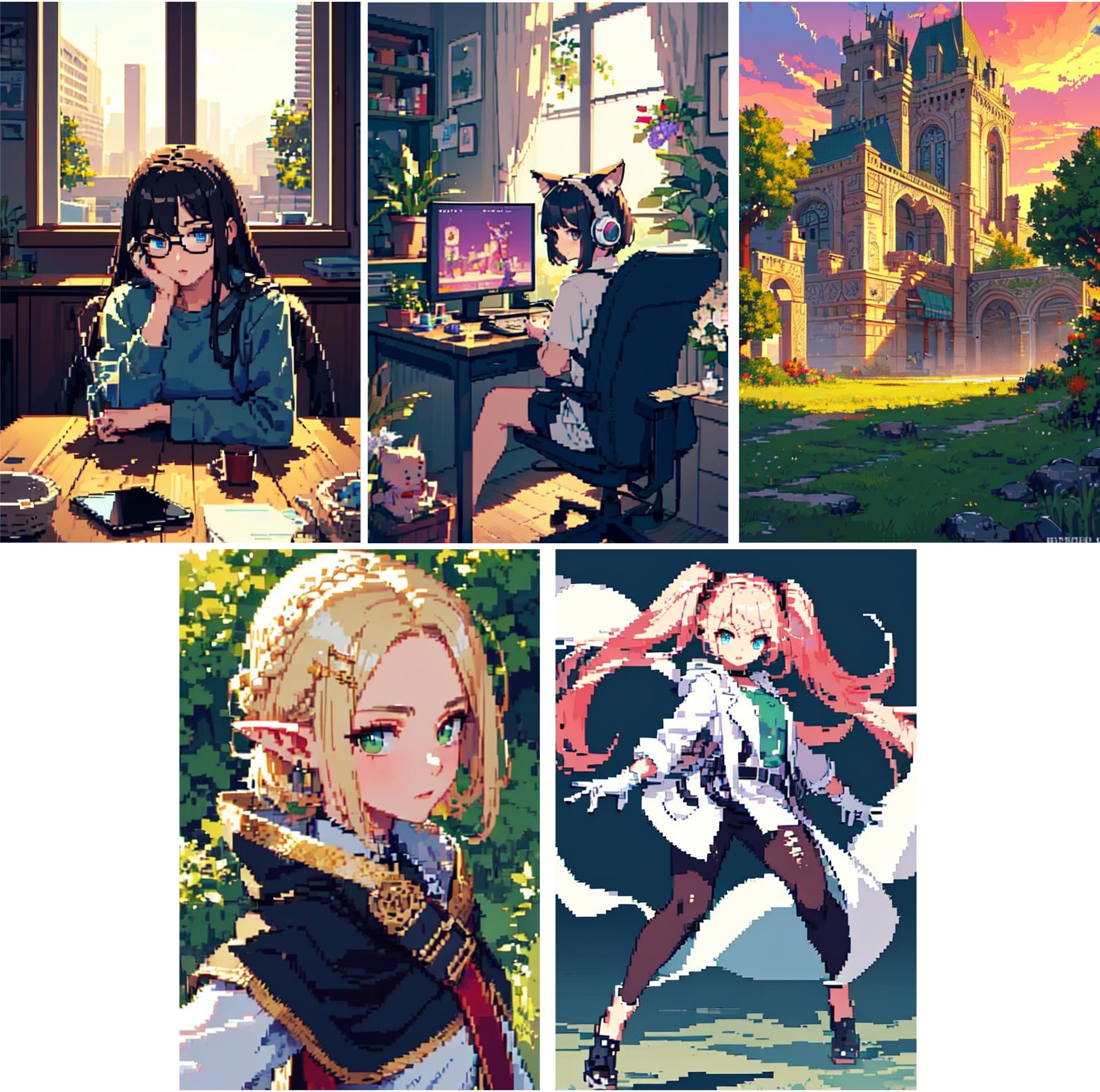

Additional Results of StyleCrafter

We present additional style-guided text-to-video generation results of our method.

| Prompt |
|---|
| "A bear catching fish in a river." |
| "A wooden sailboat docked in a harbor." |
| "A chef preparing meals in kitchen." |
| "A campfire surrounded by tents." |

References

[1] Xiang Wang, Hangjie Yuan, Shiwei Zhang, Dayou Chen, Jiuniu Wang, Yingya Zhang, Yujun Shen, Deli Zhao, and Jingren Zhou. VideoComposer: Compositional video synthesis with motion controllability. arXiv preprint arXiv:2306.02018, 2023.

[2] Haoxin Chen, Menghan Xia, Yingqing He, Yong Zhang, Xiaodong Cun, Shaoshu Yang, Jinbo Xing, Yaofang Liu, Qifeng Chen, Xintao Wang, Chao Weng, and Ying Shan. VideoCrafter1: Open diffusion models for high-quality video generation. arXiv preprint arXiv:2310.19512, 2023.

[3] OpenAI. GPT-4V(ision) system card. Technical report, 2023.

[4] Gen-2 contributors. Gen-2. Accessed Nov. 1, 2023. [Online] https://research.runwayml.com/gen2.

[5] Yuwei Guo, Ceyuan Yang, Anyi Rao, Yaohui Wang, Yu Qiao, Dahua Lin, and Bo Dai. AnimateDiff: Animate your personalized text-to-image diffusion models without specific tuning. arXiv preprint arXiv:2307.04725, 2023.